I give up for now: the upgrade process is broken and stuck in a loop…

I first upgraded the OS, then tried to upgrade MediaWiki from 1.29 to 1.35.

I had to temporarily drop a lot of indexes, otherwise update.php would crash. Below are their CREATE statements from the schema; when attempting the update again, drop these first:

```sql
CREATE INDEX "cl_sortkey" ON "hg_categorylinks" ("cl_to","cl_sortkey","cl_from");
CREATE INDEX "cl_timestamp" ON "hg_categorylinks" ("cl_to","cl_timestamp");
CREATE INDEX "tl_from" ON "hg_templatelinks" ("tl_from","tl_namespace","tl_title");
CREATE INDEX "pl_namespace" ON "hg_pagelinks" ("pl_namespace","pl_title","pl_from");
CREATE INDEX "md_module_skin" ON "module_deps" ("md_module","md_skin");
CREATE INDEX "keyname" ON "hg_objectcache" ("keyname");
CREATE INDEX "qci_type" ON "awquerycache_info" ("qci_type");
CREATE INDEX "user_properties_user_property" ON "user_properties" ("up_user","up_property");
CREATE INDEX "img_usertext_timestamp" ON "hg_image" ("img_user_text","img_timestamp");
CREATE INDEX "img_size" ON "awimage" ("img_size");
CREATE INDEX "img_timestamp" ON "awimage" ("img_timestamp");
CREATE INDEX "img_sha1" ON "hg_image" ("img_sha1");
CREATE INDEX "ipb_address" ON "awipblocks" ("ipb_address","ipb_user","ipb_auto","ipb_anon_only");
CREATE INDEX "ipb_user" ON "awipblocks" ("ipb_user");
CREATE INDEX "ipb_range" ON "awipblocks" ("ipb_range_start","ipb_range_end");
CREATE INDEX "ipb_timestamp" ON "awipblocks" ("ipb_timestamp");
CREATE INDEX "ipb_expiry" ON "awipblocks" ("ipb_expiry");
CREATE INDEX "fa_name" ON "hg_filearchive" ("fa_name","fa_timestamp");
CREATE INDEX "fa_storage_group" ON "hg_filearchive" ("fa_storage_group","fa_storage_key");
CREATE INDEX "fa_deleted_timestamp" ON "hg_filearchive" ("fa_deleted_timestamp");
```
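Instead of copying these statements by hand, a small Python script could read each index's CREATE statement from `sqlite_master`, keep it for later, and then drop the index. This is only a sketch: it assumes you run it against a backup copy of the wiki's SQLite file while nothing else is using it, and the `INDEXES_TO_DROP` list and `drop_indexes` helper are made up for this post, not part of MediaWiki.

```python
from __future__ import annotations

import sqlite3

# Hypothetical list: the index names from the CREATE statements above.
INDEXES_TO_DROP = [
    "cl_sortkey", "cl_timestamp", "tl_from", "pl_namespace", "md_module_skin",
    "keyname", "qci_type", "user_properties_user_property",
    "img_usertext_timestamp", "img_size", "img_timestamp", "img_sha1",
    "ipb_address", "ipb_user", "ipb_range", "ipb_timestamp", "ipb_expiry",
    "fa_name", "fa_storage_group", "fa_deleted_timestamp",
]


def drop_indexes(conn: sqlite3.Connection, names: list[str]) -> list[str]:
    """Drop each named index and return its CREATE statement so it can be recreated later."""
    saved = []
    for name in names:
        row = conn.execute(
            "SELECT sql FROM sqlite_master WHERE type = 'index' AND name = ?",
            (name,),
        ).fetchone()
        if row is None or row[0] is None:
            # Index doesn't exist, or it is an automatic index (no SQL) that can't be dropped.
            continue
        saved.append(row[0] + ";")
        # Identifiers can't be bound as parameters, so quote the name by hand.
        quoted = '"' + name.replace('"', '""') + '"'
        conn.execute(f"DROP INDEX {quoted}")
    conn.commit()
    return saved
```

Printing the returned statements into a file (for example `recreate_indexes.sql`, a hypothetical name) would make it easy to restore the indexes once update.php has finished.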

Afterwards, update.php got stuck in a seemingly infinite loop; I waited two days. It uses 100% CPU and writes to the SQLite database every few seconds. The CLI output:

```
......
...skipping: index il_from doesn't exist.
...skipping: index iwl_from doesn't exist.
...skipping: index ll_from doesn't exist.
...skipping: index ls_field_val doesn't exist.
...skipping: index md_module_skin doesn't exist.
...skipping: index keyname doesn't exist.
...skipping: index qci_type doesn't exist.
...skipping: index ss_row_id doesn't exist.
...skipping: index ufg_user_group doesn't exist.
...skipping: index user_properties_user_property doesn't exist.
...comment table already exists.
...revision_comment_temp table already exists.
...have ar_comment_id field in archive table.
...img_description in table image already modified by patch patch-image-img_description-default.sql.
...have ipb_reason_id field in ipblocks table.
...have log_comment_id field in logging table.
...have oi_description_id field in oldimage table.
...have pt_reason_id field in protected_titles table.
...have rc_comment_id field in recentchanges table.
...rev_comment in table revision already modified by patch patch-revision-rev_comment-default.sql.
...have img_description_id field in image table.
Adding fa_description_id field to table filearchive ...done.
Migrating comments to the 'comments' table, printing progress markers. For large
databases, you may want to hit Ctrl-C and do this manually with
maintenance/migrateComments.php.
Beginning migration of revision.rev_comment to revision_comment_temp.revcomment_comment_id
... 105
... 208
... 321
... 442
... 542
... 649
... 752
... 852
... 954
... 1058
... 1209
... 1316
... 1421
... 1525
... 1626
```

At the moment it keeps writing the same four rows to the comment table over and over, with only the comment_id changing:

```
comment_id,comment_hash,comment_text,comment_data
336742,1784768939,-31,
336743,1453543653,-921755,
336744,-1964503665,-656859,
336745,-418896487,-812338,
336746,1784768939,-31,
336747,1453543653,-921755,
336748,-1964503665,-656859,
336749,-418896487,-812338,
336750,1784768939,-31,
336751,1453543653,-921755,
336752,-1964503665,-656859,
336753,-418896487,-812338,
336754,1784768939,-31,
336755,1453543653,-921755,
...
923945,-418896487,-812338,
923946,1784768939,-31,
923947,1453543653,-921755,
923948,-1964503665,-656859,
923949,-418896487,-812338,
923950,1784768939,-31,
923951,1453543653,-921755,
923952,-1964503665,-656859,
923953,-418896487,-812338,
923954,1784768939,-31,
923955,1453543653,-921755,
923956,-1964503665,-656859,
923957,-418896487,-812338,
```
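To measure how far the duplication has gone, and to confirm that the newest rows really cycle through the same few comments, a Python sketch like the following could be run against a copy of the database. The table name is an assumption: the log just says "comment", but with this wiki's table prefix it may be called something like `hg_comment`. The helper names are hypothetical.

```python
import sqlite3


def _quote(identifier: str) -> str:
    """Quote an SQL identifier (table names can't be bound as parameters)."""
    return '"' + identifier.replace('"', '""') + '"'


def duplicate_comments(conn, table="comment", min_count=2):
    """Return (hash, text, count, first_id, last_id) for comments stored at least min_count times."""
    return conn.execute(
        "SELECT comment_hash, comment_text, COUNT(*), MIN(comment_id), MAX(comment_id) "
        f"FROM {_quote(table)} "
        "GROUP BY comment_hash, comment_text, comment_data "
        "HAVING COUNT(*) >= ? ORDER BY COUNT(*) DESC",
        (min_count,),
    ).fetchall()


def latest_comments(conn, table="comment", limit=200):
    """Return (hash, text) of the newest `limit` rows, oldest first."""
    rows = conn.execute(
        f"SELECT comment_hash, comment_text FROM {_quote(table)} "
        "ORDER BY comment_id DESC LIMIT ?",
        (limit,),
    ).fetchall()
    rows.reverse()
    return rows


def repeat_period(rows):
    """Smallest p such that rows repeat every p entries (at least twice), else None."""
    n = len(rows)
    for p in range(1, n // 2 + 1):
        if all(rows[i] == rows[i + p] for i in range(n - p)):
            return p
    return None
```

For rows like the ones above, `repeat_period(latest_comments(conn))` would be expected to report a period of 4.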

According to people in #mediawiki, this should not happen and might point to a bug in the update process. Yay.

One suggestion was to upgrade one release at a time, which might be safer: 1.29 → 1.30 → 1.31 and so on, until reaching a version from which upgrading to the latest LTS is officially supported. From my experience, though, even that might fail, because sadly they don't care much about databases other than MySQL.
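The one-release-at-a-time approach could be scripted roughly like this, backing up the SQLite file before each step so that a hang or crash only costs one increment. It is a sketch under loudly stated assumptions: the `mediawiki-<version>` directory layout, each release already unpacked with a `LocalSettings.php` pointing at the same database, and the helper names are all hypothetical; only `php maintenance/update.php --quick` is MediaWiki's own updater call. The 1.35 target is just the original goal from above.

```python
from __future__ import annotations

import shutil
import subprocess
from pathlib import Path

UPDATE_CMD = ["php", "maintenance/update.php", "--quick"]  # --quick skips the countdown


def upgrade_path(start: str, target: str) -> list[str]:
    """Every 1.x release after `start`, up to and including `target`."""
    major_s, minor_s = (int(x) for x in start.split("."))
    major_t, minor_t = (int(x) for x in target.split("."))
    if major_s != major_t:
        raise ValueError("this sketch only handles 1.x to 1.y upgrades")
    return [f"{major_s}.{m}" for m in range(minor_s + 1, minor_t + 1)]


def run_upgrade(releases_dir: Path, db_file: Path, start: str, target: str,
                dry_run: bool = True) -> list[str]:
    """Run update.php once per release; assumes the hypothetical layout
    releases_dir / "mediawiki-<version>" for each unpacked release."""
    done = []
    for version in upgrade_path(start, target):
        mw_dir = releases_dir / f"mediawiki-{version}"
        if dry_run:
            print(f"[{version}] back up {db_file}, then run {' '.join(UPDATE_CMD)} in {mw_dir}")
        else:
            # Keep the pre-step database so a failed step can be retried from here.
            backup = db_file.with_name(f"{db_file.name}.before-{version}")
            shutil.copy2(db_file, backup)
            # check=True raises CalledProcessError and stops the chain on failure.
            subprocess.run(UPDATE_CMD, cwd=mw_dir, check=True)
        done.append(version)
    return done
```

Leaving `dry_run=True` first just prints the planned steps, which is a cheap way to sanity-check the layout before touching the database.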

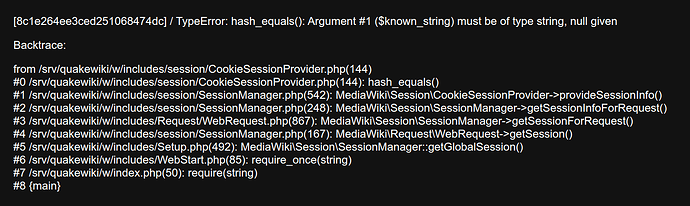

I cannot justify sinking more time into this right now. If someone else wants to give it a try, or even take over the site entirely, please contact me. You would also have to upgrade the OS (easy, just Ubuntu 20 to 22 to 24), though I can jump in for that if you want. You would also have to figure out how to get a modern version of the Monaco skin, for example DoomWiki's port at https://github.com/haleyjd/monaco-port or maybe https://github.com/Universal-Omega/Monaco. Otherwise, I might just turn the site into a static archive, so that its outdated MediaWiki version no longer makes it a worthwhile target for exploitation…